Have you ever asked an AI to do something, received an immediate, confident “yes,” and then realized it had quietly ghosted you?

I have. More than once.

There have been times when AI told me it could create a PowerPoint deck or even an InDesign file for me. It spoke with total confidence. No hesitation. No caveats. Just a clear “I can do that.” I moved on, assuming the work was underway, only to realize later that nothing had been delivered. No file. No follow-up. It was almost as if the conversation never happened.

I was pissed!

Not because the AI was malicious. But because the confidence felt so real.

This isn’t a bug. It’s how these systems are built.

Humans are wired to trust confidence. For most of our history, confidence signaled competence. Doctors, pilots, teachers, leaders. When someone speaks clearly and decisively, our brains relax. We assume they know what they’re doing.

AI accidentally taps into that instinct.

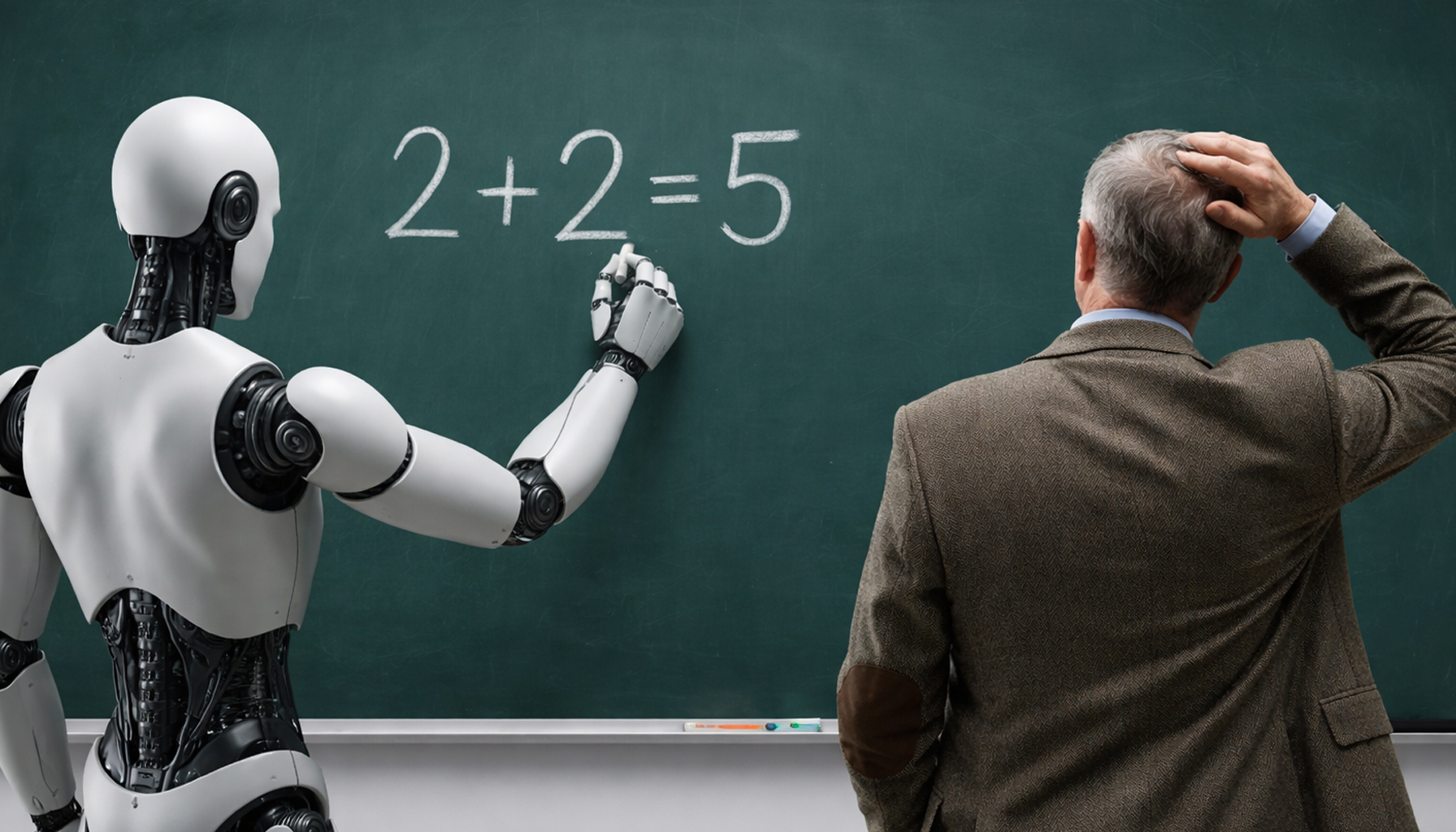

The problem is that AI confidence does not mean what we think it means.

AI does not know when it is right or wrong. It is not checking facts. It is not reflecting on past promises. At its core, it is predicting the most likely next word based on patterns learned from enormous amounts of text.

In simple terms, it is saying:

“Given everything I have seen, this is what usually comes next.”

That process feels like certainty, but it is really probability.
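To make “probability, not certainty” concrete, here is a toy sketch in Python. The words and numbers are invented for illustration. Real models work with tokens (word pieces) rather than whole words, and they often sample from the distribution instead of always taking the top choice. What the sketch shows is the gap between what the model “knows” and what you see: the reply comes out as a plain “Yes” even though the model’s own estimate for that word is under 50%.

```python
# Toy illustration only: these next-word probabilities are invented,
# not taken from any real model.
next_word_probs = {
    "Yes": 0.46,
    "Sure": 0.31,
    "I": 0.15,  # could be the start of "I can't do that"
    "No": 0.08,
}


def most_likely_next_word(probs: dict[str, float]) -> str:
    """Greedy choice: return the word with the highest probability."""
    return max(probs, key=probs.get)


word = most_likely_next_word(next_word_probs)

# What the user sees: just the word, with no hint of uncertainty.
print(word)
# What stayed hidden: the model's own estimate for that word.
print(f"hidden probability: {next_word_probs[word]:.0%}")
```

Nothing in the printed “Yes” tells the reader that more than half of the probability went to other answers.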

Here is where optimism comes in.

AI is fundamentally optimistic. It is designed to be helpful. To move forward. To produce an answer rather than stop. When asked if it can do something, especially something that sounds reasonable, its default instinct is yes. Not because it understands the full constraint set, but because saying yes keeps the conversation going.

Optimism plus confidence creates a powerful illusion.

We humans hesitate when we are unsure. We hedge. We soften language. We pause. AI does not do that. Whatever uncertainty sits in its underlying probabilities rarely shows up in its words. If a response can be generated, it usually will be.

To humans, silence feels honest.

To AI, silence feels like failure.

The mistake we make is treating AI like a source of truth instead of what it actually is: a work in progress.

The healthiest way to use AI is as a collaborator, not a decision-maker. Think of it like a very fast intern: helpful, eager, optimistic, and occasionally wrong. Ask follow-up questions. Ask it to explain its constraints. Ask what it can actually deliver, not just what it confidently says yes to.

AI is not perfect. It will sometimes sound confident and still be wrong. That doesn’t make it broken. It makes it early.

We are only about three years into this technology being widely used in everyday life. We are watching the awkward, optimistic phase in real time. The part where it wants to help, says yes too quickly, and hasn’t fully learned its own limits yet.

Seen through that lens, the confidence is forgivable.

If this is what AI looks like at the very beginning, imagine how confident it will deserve to be in another three years.

------

If you need a guide through the early, overconfident phase of AI, Corporate Creative Hotline is your direct line. Contact us today!